Hammering at Daniel Deronda

This time, we are using a MALLET!

(I apologize for the pun, but it does not seem to get old).

MALLET stands for MAchine Learning for LanguagE Toolkit and is proof that, among other things, there is no such thing as an impossible acronym. MALLET is a Java-based package designed for multiple kinds of natural language processing/machine learning, including what I used it for – Topic Modeling.

So what is Topic Modeling? Well, let’s say that texts are made up of a number of topics. How many? That depends on the text. So every word in that text (with the exception of common words like “an”) should be related to one of those topics. In topic modeling mode, MALLET divides a set of texts into X topics (where X is your best guesstimate of how many there should be) and outputs the words assigned to each topic, along with a shorter list of top words for each one. Your job, as the human, is to guess what those topics are.

For more on the idea behind topic modeling, check out Matthew Jockers’ Topic Modeling Fable for the decidedly non-technical version or Clay Templeton’s Overview of Topic Modeling in the Humanities.

Now for the second question – why am I doing it? Beyond the “well, it’s cool!” and “because I can,” that is, both of which are valid reasons, especially in DH. My third reason is, in a way, a subset of the second. I want to test the feasibility of topic modeling so that, as this year’s Transcriptions Fellow*, I can help others use it in their own work. But in order to help others, I first need to help myself.

So, for the past two weeks or so, I’ve been playing around with MALLET, which is fairly easy to run and, as I inevitably discovered, fairly easy to run badly. Because of the nature of topic modeling, which is less interested in tracking traditional co-occurrences of words (i.e. how often two specific words are found within 10 words of each other) and more interested in seeing text segments as larger grab-bags of words where every word is equidistant from every other**, you get the best topic models when working with chunks of 500-1000 words. So after a few less-than-useful results when I had divided the text by chapters, I realized that I needed a quick way to turn a 300,000+ word text file into 300+ 1000-word text files.

Why so long a text? Well, George Eliot’s Daniel Deronda is in fact a really long text. Why Daniel Deronda? Because, as the rest of this blog demonstrates, DD has become my go-to text for experimenting with text analysis (and, well, any other form of analysis).

So I have MALLET, I have Daniel Deronda, I now also have a method for splitting the text thanks to my CS friends on Facebook and, finally, I have IBM’s “Many Eyes” visualization website for turning the results into human-readable graphics. All that’s missing is a place to post the results and discuss them.
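(A quick aside for anyone who wants to try the splitting step themselves. What follows is a minimal sketch in Python and not the script my CS friends supplied; the function names, the `chunk_NNNN.txt` naming pattern, and the choice to split on whitespace are all assumptions made for illustration.)

```python
from pathlib import Path


def split_into_chunks(text, chunk_size=1000):
    """Split text into consecutive chunks of at most chunk_size words.

    "Words" here are simply whitespace-separated tokens, which is an
    assumption; a real tokenizer might count them differently.
    """
    words = text.split()
    return [
        " ".join(words[start:start + chunk_size])
        for start in range(0, len(words), chunk_size)
    ]


def write_chunks(source_path, output_dir, chunk_size=1000):
    """Write each chunk of the source file to its own numbered .txt file.

    Returns the number of files written. The chunk_NNNN.txt naming is a
    hypothetical convention, chosen so the files sort in reading order.
    """
    text = Path(source_path).read_text(encoding="utf-8")
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    chunks = split_into_chunks(text, chunk_size)
    for number, chunk in enumerate(chunks, start=1):
        (out / f"chunk_{number:04d}.txt").write_text(chunk, encoding="utf-8")
    return len(chunks)
```

Calling something like `write_chunks("daniel_deronda.txt", "dd_chunks")` (both names hypothetical) would leave a folder of small text files that can then be handed to MALLET as a directory of documents. Note that the final chunk will usually be shorter than the rest, and that line breaks inside each chunk are collapsed into single spaces.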

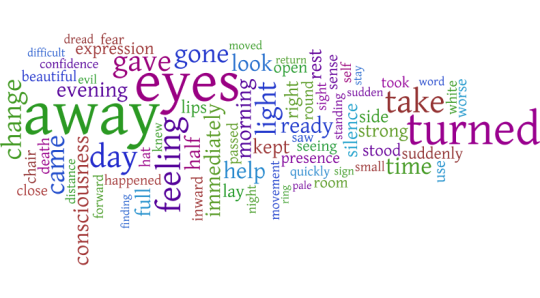

I knew Ludic Analytics would not let me down. So, without further ado, I present the 6 topics of Daniel Deronda, organized into word clouds where size, as always, represents the word’s frequency within the topic:

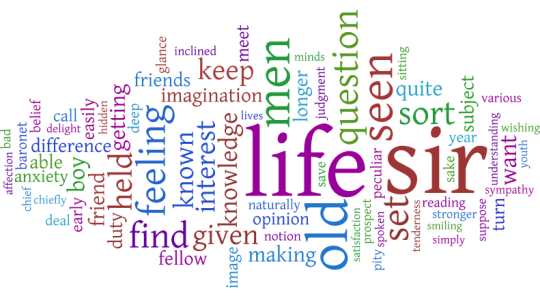

Topic 1:

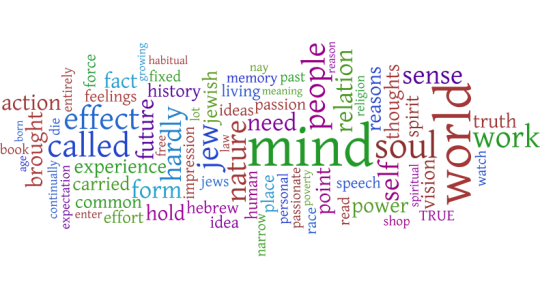

Topic 2:

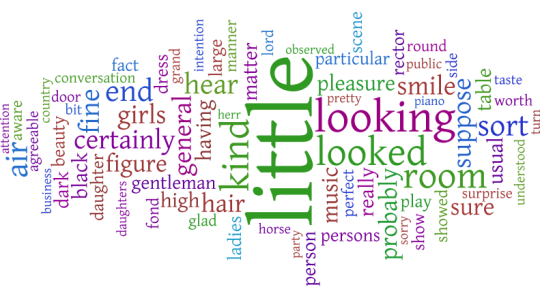

Topic 3:

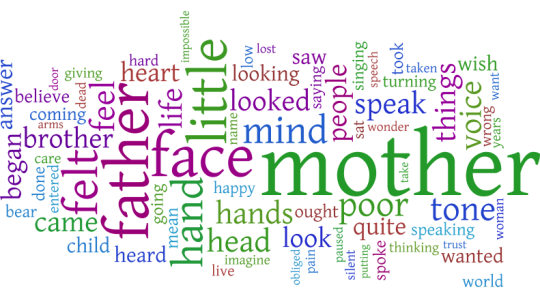

Topic 4:

Topic 5:

Topic 6:

You will notice that the topics themselves do not yet have titles, only identifying numbers. Which brings us to the problem with Topic Modeling small text sets – too few examples to really get high-quality results that identify what we would think of as topics. (Also, topic modeling apparently works better when one uses a part-of-speech (POS) tagger and even gets rid of everything that isn’t a noun. Or so I have heard.)

Which is not to say that I will not take a stab at identifying them, not as topics, but as people. (If you’ve never read Daniel Deronda, this may make less sense to you…)

1. Daniel
2. Mordecai
3. Society
4. Mirah
5. Mirah/Gwendolen
6. Gwendolen

I will leave you all with two questions:

Given the caveat that one needs a good-sized textual corpus to REALLY take advantage of topic modeling as it is meant to be used, in what interesting ways might we play with MALLET by using it on smaller corpora or single texts like this? Do the 6 word clouds above suggest anything interesting to you?

And, as a follow-up, what do you make of my Daniel Deronda word clouds? If you’ve never read the text, what would you name each topic? And, if you have read the text, what do you make of my categorizations?

—

*Oh, yes. I’m the new Graduate Fellow at the Transcriptions Center for Literature & the Culture of Information. Check us out online and tune in again over the course of the next few weeks to see some of the exciting recent developments at the Center. Just because I haven’t gotten them up onto the site yet doesn’t mean they don’t exist!

**This is a feature, not a bug. Take, for example, a series of conversations between friends in which they always reference the same 10 movies, although not always in the same order. MALLET would be able to identify that set of references as one topic (one that the human would probably call “movies”), while collocation wouldn’t be able to tell that the first movie and the last movie were part of the same group. By breaking a long text up into 500-1000 word chunks, we are approximating how long something stays on the same topic.
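The movie example can be made concrete with a toy sketch in Python. This is only an illustration of the grab-bag idea versus a fixed collocation window, not a description of how MALLET works internally; the film list, the function names, and the window size of 5 are all invented for the example.

```python
from collections import Counter


def bag_of_words(tokens):
    """Count how often each token appears, ignoring word order entirely."""
    return Counter(tokens)


def within_window(tokens, first, second, window):
    """Return True if first and second ever occur within `window` positions of each other."""
    first_positions = [i for i, token in enumerate(tokens) if token == first]
    second_positions = [i for i, token in enumerate(tokens) if token == second]
    return any(abs(i - j) <= window for i in first_positions for j in second_positions)


# Two made-up "conversations" that mention the same ten films in a different order.
movies = ["alien", "brazil", "casablanca", "diva", "eraserhead",
          "fargo", "gattaca", "heat", "ikiru", "jaws"]
conversation_a = movies
conversation_b = list(reversed(movies))

# The grab-bag view treats both conversations as the same collection of words.
same_bag = bag_of_words(conversation_a) == bag_of_words(conversation_b)

# A 5-word collocation window links neighbouring films but never sees the
# first and last film together, because they sit 9 positions apart.
neighbours_linked = within_window(conversation_a, "alien", "brazil", window=5)
ends_linked = within_window(conversation_a, "alien", "jaws", window=5)
```

Here `same_bag` and `neighbours_linked` are both true while `ends_linked` is false: the window never connects the two ends of the list, whereas the grab-bag view cannot tell the two conversations apart at all.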