Welcome to the final post in this Ludic Analytics series on Sefaria. While my research itself is ongoing, this part of the project, where I experiment with the images I can make and ponder their value, has come to a close.

This post is distinct from the previous ones, which can be found here: part 1, part 2, and part 3, in that I’m finally going to move away from looking at the images themselves and focus instead on what their larger purpose is. But before I get to that, an important announcement.

All the data from this project – all three datasets, the .gexf files, the exportable .csv versions and some of the high-res images – are now available on my GitHub page for the Sefaria Visualization project. Sefaria is serious about its commitment to an open repository and I share that commitment with them. So if you want to grab any of these datasets and play around with them, please do so. I would very much like to see what you do with them.

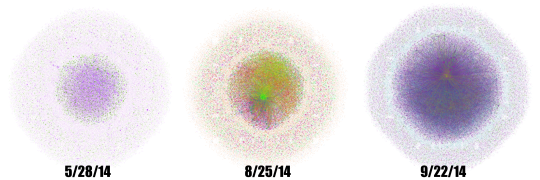

A word of caution, however. These datasets are large and Gephi requires a lot of memory. All three datasets, but especially the August and September ones, will take forever to run on a computer with less than 8GB of RAM. Run them in the background on a machine that can handle it and assume that any layout algorithm other than plotting data points on a 2D plane will take some time to render. So, if you’re like me, and started doing this on a 2011 MacBook Air with 4GB of memory…don’t. And if you are familiar with software other than Gephi and prefer to use that, be my guest and do let me know about it.

All the data can be found here: Sefaria Data Visualization Project.

And now, onwards!

What is the value of this research?

As we all know, answering broad and general questions is difficult, so let’s break this one down a bit.

1. How does this kind of work – making visualizations and thinking about networked Jewish text – enhance the traditional experience of studying Jewish texts in a Jewish environment?

2. How can an academic researcher make use of these visualizations and to what degree does she need to become an expert in network theory to do so?

There. That’s much less intimidating.

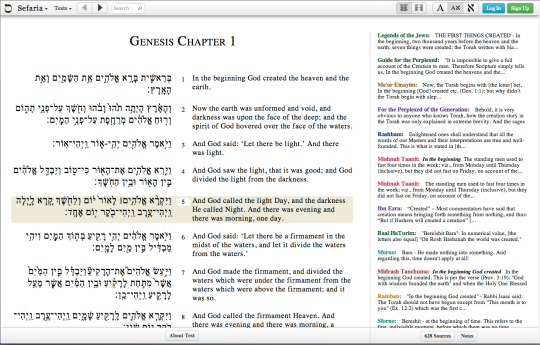

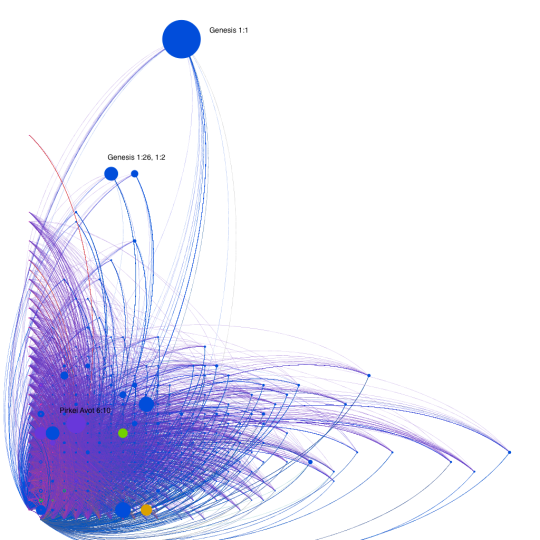

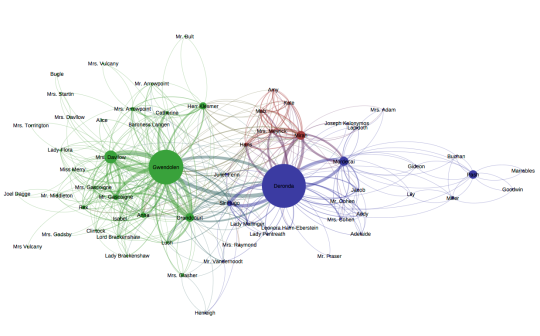

Going in order, the first question really asks whether this kind of work has value within the traditional classroom. Given that the teaching of Jewish texts often focuses on the micro level and dwells on one topic for a very long time, this kind of visualization work seems like an important counterpoint to that kind of study. If students, as part of their explorations of Jewish texts, are learning how to trace a legal ruling from its source in the Bible to the modern day responsa on the topic, turning that unbroken line of tradition into a network that they can see could be really interesting. Rather than thinking linearly, they can look at specific ideas as clusters. And, starting with one of those groups, students could begin to think in terms of idea clusters – what groups of legal decisions come from verses that are right next door to one another.

None of this is new information and all of it could, in theory, be taught without the aid of images at all. But the images make it much easier to think in a networked way.

And this is reflective also of the change that has come about with giant repositories like the Bar Ilan CD that contain an extraordinary number of sources or even Sefaria itself. We have access to the legal system as a whole in a way that really did not exist before the age of the computer. We’re going to have to think about how we want to access that system in a way that is both true to traditional forms and takes advantage of technology.1

The goal of teaching students about Jewish texts is only partially to familiarize them with the narratives they hear in synagogue and the laws that structure their lives. The other, more difficult job is to create a bond between the student and the text(s). And we do that by making the books tangible and meaningful, but we can also do that by making the text network tangible (metaphorically speaking). If we create emotional connections through interacting with texts and those connections have a profound influence on what we learn and how,2 we should be able to build on those connections through even less traditional forms of interaction. Such as making a graph.

So why is this kind of work useful in the classroom? Because it provides another way of accessing meaningful texts, one that can help students make connections they could not otherwise see and connect with the text in a way that deepens their appreciation for it.

Yes, this approach might make understanding a particular section of Jewish law a bit easier. But I’m much more interested in the way that it reshapes our relationship with all the texts as a whole. Not exactly what we can see, but how it changes the way that we look.

Which brings me to my second question, which I have partially answered in previous posts on this topic. How does an academic make use of this research?

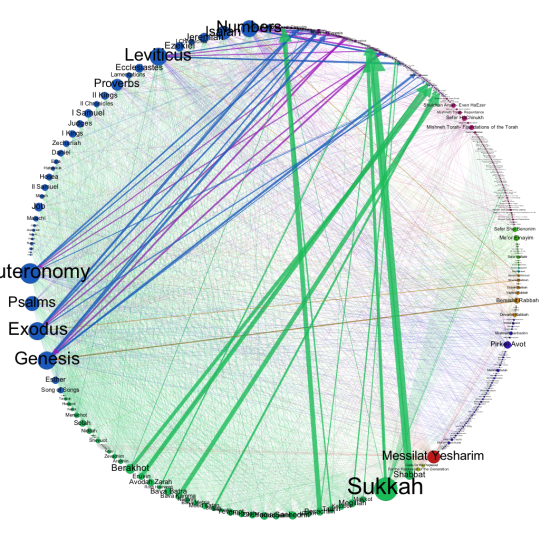

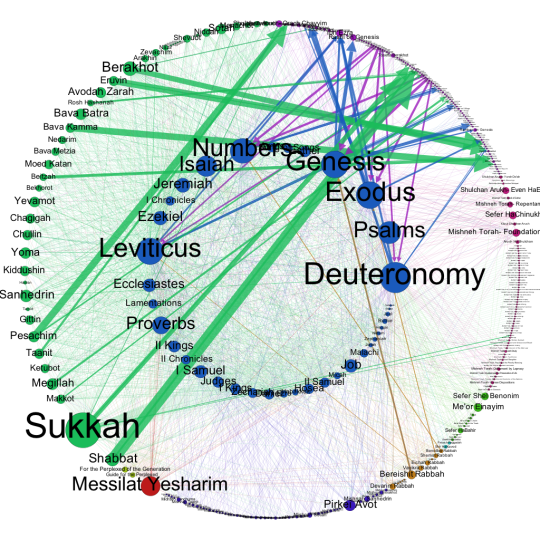

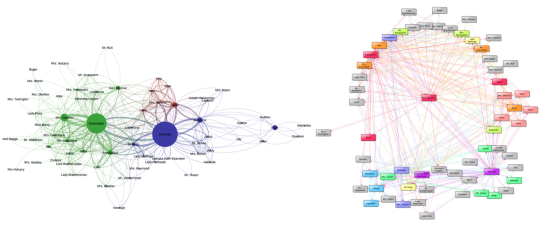

I’ve given several examples of using the graphs as pointers towards interesting features in the texts. The strangeness of tractate Sukkah that I addressed here and the connections in the 18th chapter of Tanna Debei Eliyahu that I discussed last time are good examples. Both of these are interesting features noticeable only when examining the graph and each leads to a very different kind of research. As Sara pointed out in the comments, the results I was seeing for Sukkah came from the work her Talmud students did with Sefaria. So while not a feature of the text itself, this node opens up a conversation about using Sefaria in the classroom and data collection in a large, open-source project. Conversely, research into the 18th chapter of TDE would require a very different approach as the question, as far as I can determine, involves investigating why it has a disproportionate number of prooftexts: whether the topic at hand requires so many texts or, as might always be the case, something else is at work.

And this might even be enough. If a literary critic with little or no network training can use these network graphs to discover new regions of research potential and new questions to ask about the construction of corpora, then perhaps this work has already achieved its goal.

But that feels like such a weak note on which to end. Not only because it absolves us from having to learn anything new about the networks themselves, but also because there should be so much more to do with this technology other than generate ideas and make pretty pictures.

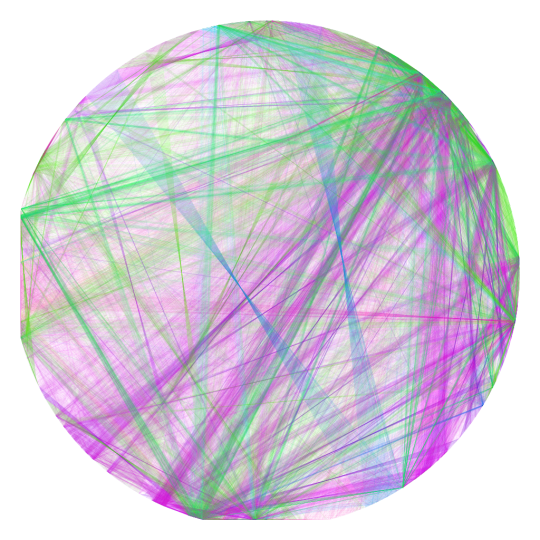

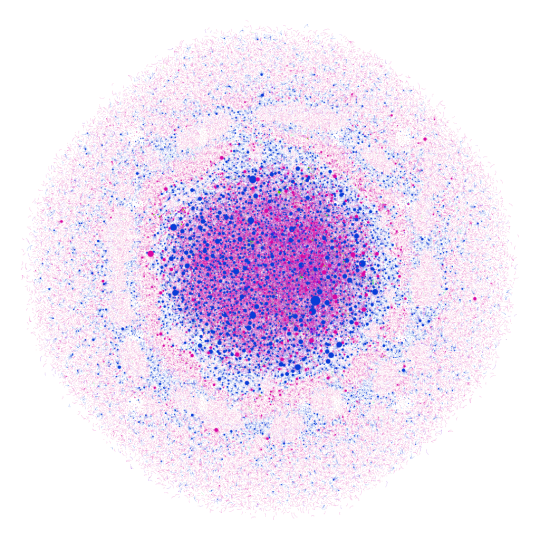

Not to discount either generating ideas or making art. The practice of displaying information in an unreadable form purely for its aesthetic appeal is valuable as an act of artistic creation. If another value of this work is a set of awesome looking pictures to hang by my desk…far be it from me to complain. They’ll look great next to the embroidered TARDIS. I said that I was in this for the visceral joy of working with texts and the delight in making things with which I think. But I will concede that not everyone wants what I want. I think we, the academic community, see the artistic values of our work as byproducts and, overall, would prefer research methods that generate answers rather than questions. So I will address that approach as well.

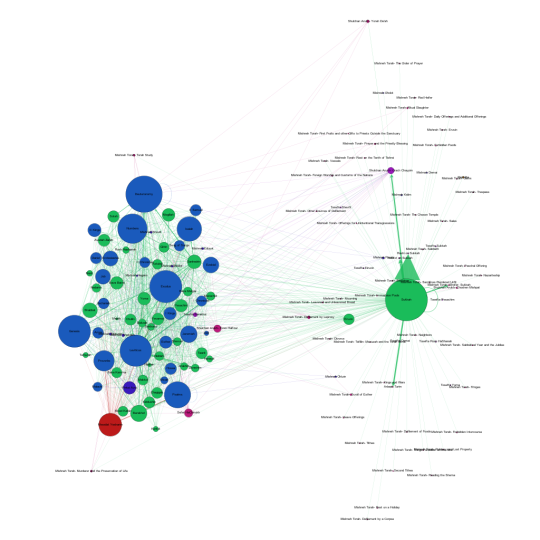

I realize that, in this conversation, I’m leaving out large swathes of digital research up to and including the WhatEvery1Says topic modeling project going on at UCSB right now under the leadership of the 4Humanities group there. Using digital tools to interpret literary texts, while not free from controversy, has a pretty impressive track record and allows us to think anew about what we know and how we know it. But for many of these approaches, the images are secondary. They are elegant methods of displaying the information detailed in the critical literature itself. I’m talking about the actual value of taking information and transforming it into a visualization as a way of answering questions about a work or a corpus. To put the question another way, when is it better to see information than to read it?

And here’s where I think we start to see the value in making visualizations and knowing network theory. This kind of research is useful for destroying the linear thinking that narrative naturally invites. Database thinking (see Manovich in Language of New Media and Hayles in How We Think) has similar results in theory, but is comparatively useless to us as human beings in practice. We can’t read databases. We have tools that can, but what we end up reading or, realistically, seeing is the visual representation of the connections that are not apparent when traversing the work. Visualization breaks narrative. And sometimes, that’s what we want.

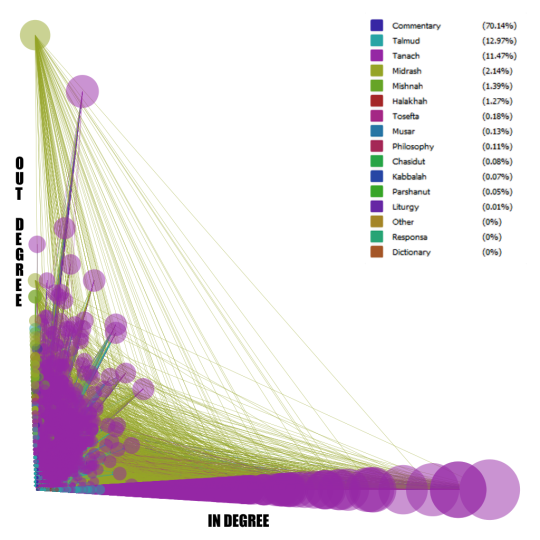

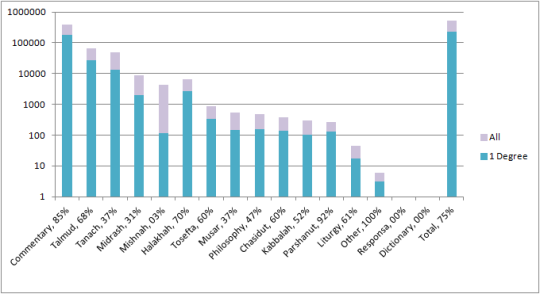

We want to break out of a narrative approach to, for example, the corpus of Jewish texts as a way of rethinking the legal, cultural and social influences that the texts have on one another. Here are some questions that, I hope, work like this might inspire us to answer.

- How accurate is the vision of the Jewish legal system as a ladder with each subsequent generation relying on the scholarship that came beforehand? Do more recent writers hearken back to the earlier legal scholars or do they go straight to the Talmud or do they skip the legal sources in their own writing and rely entirely on the Biblical texts? What, in short, does a community of scholars look like?

- Do scholars in different eras work differently? Are scholars more likely to refer to their predecessors in certain times than in others?

- How interconnected are the commentaries? How often do they quote one another?

- How interconnected is the corpus as a whole? Can you start anywhere and get back to Genesis 1:1? Which texts are inaccessible and do they share any features?

- How much of the corpus is a dead end? And are dead ends characterized by any specific features?
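The last two questions in this list translate fairly directly into graph traversal, so here is a minimal sketch of how one might start on them in Python. Everything in it is an assumption for illustration: the edge list is a hypothetical toy, not the Sefaria data, edges are assumed to run from the citing text to the cited text, and a "dead end" is taken to mean a text that cites nothing further. Other definitions are just as defensible.

```python
from collections import defaultdict, deque

# Hypothetical toy edges (citing text -> cited text); NOT taken from the Sefaria data.
EDGES = [
    ("Commentary A", "Genesis 1:1"),
    ("Legal code B", "Talmud passage C"),
    ("Talmud passage C", "Genesis 1:1"),
    ("Responsum D", "Legal code B"),
    ("Commentary E", "Commentary F"),
]

def build_graph(edges):
    """Return the node set plus forward (cites) and backward (cited-by) adjacency maps."""
    nodes = set()
    forward = defaultdict(set)
    backward = defaultdict(set)
    for citing, cited in edges:
        nodes.update((citing, cited))
        forward[citing].add(cited)
        backward[cited].add(citing)
    return nodes, forward, backward

def texts_that_reach(target, backward):
    """Breadth-first search over reversed edges: every text with some citation path to target."""
    seen = {target}
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for citing in backward.get(current, ()):
            if citing not in seen:
                seen.add(citing)
                queue.append(citing)
    return seen

def dead_ends(nodes, forward):
    """Texts with no outgoing citations (the assumed definition of a dead end)."""
    return {node for node in nodes if not forward.get(node)}

nodes, forward, backward = build_graph(EDGES)
reachable = texts_that_reach("Genesis 1:1", backward)
cut_off = nodes - reachable  # texts with no path back to Genesis 1:1

print("Cannot get back to Genesis 1:1:", sorted(cut_off))
print("Dead ends:", sorted(dead_ends(nodes, forward)))
```

On real data the edge list would come from one of the exported files rather than being typed in by hand, and the interesting part begins after the sets are computed: looking at what the cut-off texts and the dead ends have in common.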

We can’t read this information in the texts, but we can see it by looking at the visualizations. Which brings me to the end of this series, but to the beginning, I hope, of much research. All this data is available on GitHub and I welcome you to use it as you see fit.

As for me? Well, I’ve a dissertation to write and the data-viz work that I’ve been doing here is going to be a big part of that. And while my next post won’t be about Sefaria per se, let’s just say I still have a lot more to talk about when it comes to making meaning using network graphs.

–

- Last year, there was a…controversy over a specific decision made by a school principal when two of his students asked him to rule on a matter of law for them. I don’t want to get into the details of the controversy, but one of the loudest objections came from a rabbi who argued that simply having access to the sources to back up one’s opinion (he noted the Bar Ilan CD in particular) did not give someone the right to rule when more prominent rabbis disagreed with that ruling.

Leaving aside that the principal in question is absolutely not the kind of person who would scour a database for one minor dissenting opinion rather than using his own knowledge to rule as he sees fit, this argument points to a more pervasive fear within all scholarly culture.

Has the database replaced human memory? And can you really claim mastery over a topic if the mastery you have is, in fact, over the information seeking in the database?

Conversely, can you claim mastery without the database? One of the points that I think the Sefaria graph makes elegantly is that there really is (and always has been) “Too Much to Know,” to borrow the title of Ann Blair’s book on the subject. Is human knowledge of the canonical sources better than having a functioning database of every source? How do we rank knowledge without a canon?

Given that my attitude towards technology can very broadly be summed up as “technology you do not like will not go away because you do not like it, so the only choice is to make it better”, I would argue that we need to train Jewish legal scholars in both forms of study. Legal precedent (unlike literature) has a better argument for the maintenance of the canon, but I think we do our laws and ourselves a disservice if we don’t take advantage of what technology can do and realize a system for using it to better understand and, yes, rule on Jewish law.

Still, this point applies to relatively few people – the rabbis and not-rabbis responsible for handing down legal rulings. So let’s return to pedagogy.

- A claim I don’t quite have the space to back up here, but I’m working on something that will address it. It’s called my dissertation.

“Hey! Listen!”


Written by Liz S.
