MALLET redux

I considered many alternative titles for this post:

“I Think We’re Gonna Need a Bigger Corpus”

“Long Book is Long”

“The Nail is Bigger, but the MALLET Remains the Same”

“Corpo-reality: The Truth About Large Data Sets”

(I reserve the right to use that last at some later date.) But there is something to be said for brevity (thank you, Twitter) and, after all, the real point of this experiment is to see what needed to be done to generate better results using MALLET. The biggest issue with the previous run, as is inevitably the case with tools designed for large-scale analysis, was that I was using a corpus that consisted of one text. My goal, this time around, is to see what happens when I scale up.

So I copied the largest 150 novels out of a collection of 19th and early 20th century texts that I happened to have sitting on my hard drive and split them into 500-word chunks. (Many, many thanks to David Hoover at NYU, who provided me with those 300 texts several years ago as part of his Graduate Seminar on Digital Humanities. As they were already stripped of their metadata, I elected to use them.) Then I ran the topic modeling command in MALLET and discovered the first big difference between working with one large book and with 150. Daniel Deronda took 20 seconds to model. My 19th Century Corpus took 49 minutes. (In retrospect, I probably shouldn’t have used my MacBook Air to run MALLET this time.)
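For anyone who wants to try the chunking step, here is a minimal Python sketch. It is not the script used for this post: the function names, the `.txt` extension, and the output file naming are all assumptions made for illustration. It simply splits on whitespace and writes every 500 words to a separate file.

```python
from pathlib import Path


def chunk_words(text, size=500):
    """Split text into consecutive chunks of at most `size` whitespace-separated words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def write_chunks(source_dir, out_dir, size=500):
    """Write each chunk of every .txt file in source_dir to its own file in out_dir.

    The naming scheme (novel_0000.txt, novel_0001.txt, ...) is an assumption,
    chosen so that a chunk can be traced back to its novel later.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for path in sorted(Path(source_dir).glob("*.txt")):
        text = path.read_text(encoding="utf-8", errors="ignore")
        for n, chunk in enumerate(chunk_words(text, size)):
            (out / f"{path.stem}_{n:04d}.txt").write_text(chunk, encoding="utf-8")
```

The last chunk of each novel will usually be shorter than 500 words; whether to keep or drop it is a judgment call.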

Results were…mixed. Which is to say that the good results were miles ahead of last time and the bad results were…well, uninformative. I set the number of topics to 50 and, out of those 50 topics, 21 were not made up of a collection of people’s names from the books involved.* I was fairly strict with the count, so any topic with more than three or so names in the top 50 words was relegated to my mental “less than successful” pile. But the topics that did work worked nicely.
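I did this sorting by eye, but the same rule of thumb could be sketched in Python. This assumes you already have each topic's top words as a list and some set of known character names to check against; both the `known_names` set and the sample word lists below are hypothetical, not actual output from this run.

```python
def is_name_topic(top_words, known_names, max_names=3):
    """Flag a topic whose top words contain more than `max_names` known names."""
    hits = [w for w in top_words if w.lower() in known_names]
    return len(hits) > max_names


# Hypothetical name list and hypothetical top words, for illustration only.
known_names = {"louis", "trevelyan", "emily", "nora", "stanbury", "hugh"}
good_topic = ["man", "men", "world", "life", "great", "power"]
bad_topic = ["trevelyan", "nora", "stanbury", "hugh", "emily", "wife"]

print(is_name_topic(good_topic, known_names))
print(is_name_topic(bad_topic, known_names))
```

Of course, this only moves the problem: someone still has to come up with the list of names.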

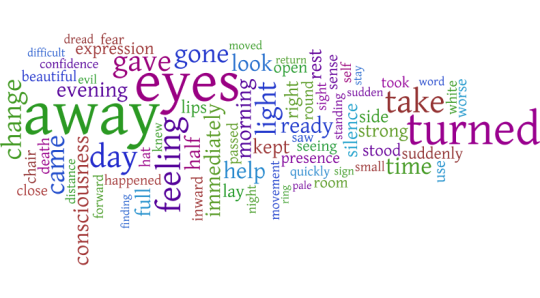

So here are two examples. The first is of a topic that, to my mind, works quite well and is easily interpretable. The second is of a topic that is the opposite of what I want, though it, too, is fairly easy to interpret.

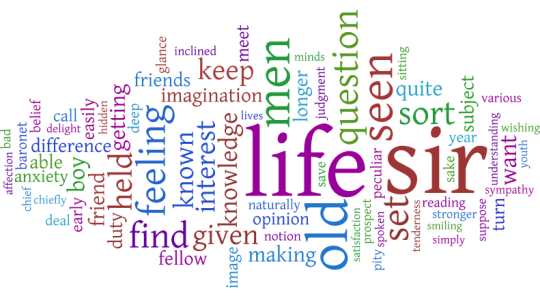

Topic #1:

So, as a topic, this one seems to be about the role of people in the world. And by people, of course, we mean MEN.

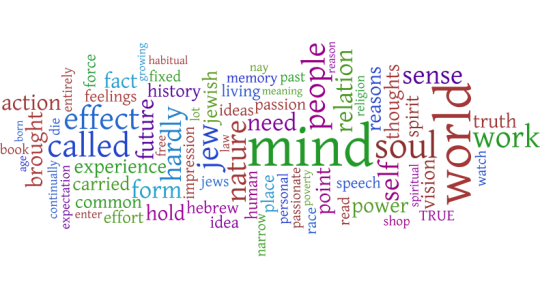

Topic #2:

Now, this requires some familiarity with 19th century literature. This topic is “Some Novels by Anthony Trollope”. While technically accurate, it’s not very informative, especially not compared to the giant man above. The problem is that, while it’s a fairly trivial endeavor to put the cast of one novel into a stop list, it’s rather more difficult to find every first and last name mentioned in 150 Victorian novels and take them out. In an even larger corpus (one with over 1,000 books, say), these names might not be as noticeable simply because there are so many books. But in a corpus this size, a long book like “He Knew He Was Right” can dominate a topic.

There is a solution to this problem, of course. It’s called learning how to quickly and painlessly (for a given value of both of those terms) remove proper nouns from a text. I doubt I will have mastered that by next week, but it is on my to-do list (under “Learn R” which is, as with most things, easier said than done).
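One crude way to approximate this, sketched in Python, is to treat a word as a probable proper noun if it shows up capitalized in the middle of a sentence and never shows up in lowercase anywhere in the text. This is only a heuristic under stated assumptions, not a real named-entity recognizer, and the function names are made up for illustration. It will miss names that only ever begin a sentence, and it treats the period in “Mr.” as a sentence break.

```python
import re

SENTENCE_END = {".", "!", "?"}


def probable_proper_nouns(text):
    """Return lowercased words that appear capitalized mid-sentence and never in lowercase."""
    tokens = re.findall(r"[A-Za-z']+|[.!?]", text)
    lowercase_seen = set()
    capitalized_mid = set()
    sentence_start = True
    for tok in tokens:
        if tok in SENTENCE_END:
            sentence_start = True
            continue
        if tok[0].isupper():
            # Skip single letters such as the pronoun "I".
            if not sentence_start and len(tok) > 1:
                capitalized_mid.add(tok.lower())
        else:
            lowercase_seen.add(tok.lower())
        sentence_start = False
    return capitalized_mid - lowercase_seen


def strip_words(text, words):
    """Drop every whitespace-separated token whose letters match an entry in `words`."""
    kept = []
    for w in text.split():
        bare = re.sub(r"[^A-Za-z']", "", w).lower()
        if bare not in words:
            kept.append(w)
    return " ".join(kept)
```

The resulting set could either be stripped from the chunks directly or written out, one word per line, as an addition to a stopwords list.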

In the meantime, here are six more word clouds culled from my fifty. Five of these are from the “good” set and one more is from the “bad”.

Topic #3:

Topic #4:

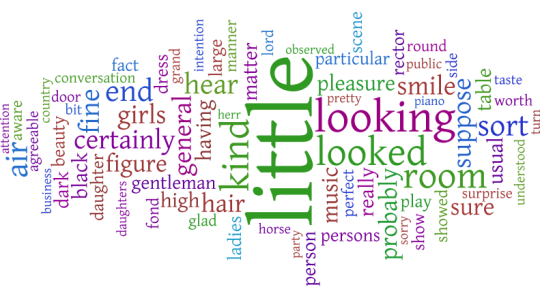

(I should note, by the way, that party appears in another topic as well. In that one, it means party as a celebration. So MALLET did distinguish between the two parties.)

Topic #5:

Topic #6:

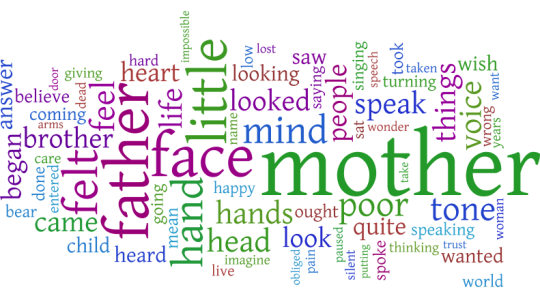

Topic #7:

Topic #8:

There are 42 more topics, but since I’m formatting these word clouds individually in Many Eyes, I think these 8 are enough to start with.

So the question now on everyone’s mind (or certainly on mine) is what do I do with these topic models? I could (and may, in some future post) take some of the better topics and look for the novels in which they are most prevalent. I could see where in the different novels reading is the dominant topic, for example. I could also see which topics, overall, are the most popular in my corpus. On another note, I could use these topics to analyze Daniel Deronda and see what kinds of results I get.
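As a rough idea of what “most prevalent” could mean in practice, here is a Python sketch that averages topic proportions across the chunks of each novel and then ranks the novels for a given topic. It assumes the per-chunk proportions have already been loaded into a dictionary keyed by chunk name, and that chunk names look like `novel_0001` (both assumptions of mine). Parsing MALLET's own output is deliberately left out, since the layout of that file depends on the version you run.

```python
from collections import defaultdict


def mean_topic_weights(chunk_weights):
    """Average per-chunk topic proportions into one vector per novel."""
    sums = {}
    counts = defaultdict(int)
    for chunk_name, weights in chunk_weights.items():
        # Assumed naming scheme: everything before the last underscore is the novel.
        novel = chunk_name.rsplit("_", 1)[0]
        if novel not in sums:
            sums[novel] = [0.0] * len(weights)
        for i, w in enumerate(weights):
            sums[novel][i] += w
        counts[novel] += 1
    return {novel: [s / counts[novel] for s in total] for novel, total in sums.items()}


def top_novels(novel_weights, topic, n=5):
    """Return the n novels in which the given topic index has the highest mean weight."""
    ranked = sorted(novel_weights, key=lambda nv: novel_weights[nv][topic], reverse=True)
    return ranked[:n]


# Made-up proportions for a two-topic model, for illustration only.
chunk_weights = {
    "deronda_0000": [0.8, 0.2],
    "deronda_0001": [0.6, 0.4],
    "right_0000": [0.1, 0.9],
}
means = mean_topic_weights(chunk_weights)
print(top_novels(means, topic=1, n=1))
```

Averaging over chunks is only one choice; counting the chunks in which a topic is dominant would answer a slightly different question.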

Of course, I could also just stare up at the word clouds and think. What is going on with the “man” cloud up in topic #1? (Will it ever start raining men?) Might there be some relationship between that and evolving ideas of masculinity in the Victorian era? Why is “money” so much bigger than anything else in topic #6? What does topic #7 have to say about family dynamics?

And, perhaps the most important question to me, how do you bring the information in these word clouds back into the texts in a meaningful fashion? Perhaps that will be next week’s post.

—

*MALLET allows you to add a stopwords list, which is a list of words automatically removed from the text. I did include the list, but it’s by no means a full list of every common last name in England. And, even if it were, the works of Charles Dickens included in this corpus would leave it utterly stymied.